Dr Xander van Lill

The use of cognitive ability in selection

A CLASSIFICATION OF COGNITIVE ABILITY

Psychological and human resource professionals must have a good knowledge of what is meant by general, broad, and specific mental ability and be able to make informed choices between specific measures of cognitive ability depending on the job requirements.

Introduction

The predictive power of cognitive ability for job performance has been established through several meta-analytical studies (Salgado et al., 2003; Salgado & Moscoso, 2019; Schmidt et al., 2008; Schmidt et al., 2016).

Since Schmidt and Hunter's (1998) seminal article on the utility of different selection methods, a new procedure has been implemented that corrected for downward bias caused by range restriction in the initial methodology used (Schmidt et al., 2016).
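To make the idea of a range restriction correction concrete, the sketch below applies the classical formula for direct range restriction (often called Thorndike's Case II). This is a simpler formula than the procedure used in the cited work and is shown only to illustrate why validities observed in already-selected samples tend to be too low. The function name and the input values are hypothetical.

```python
import math


def correct_direct_range_restriction(r_restricted: float, sd_ratio: float) -> float:
    """Correct a validity coefficient for direct range restriction.

    r_restricted: correlation observed in the restricted (e.g. hired) sample.
    sd_ratio: predictor SD in the applicant pool divided by the predictor SD
        in the restricted sample (values above 1 indicate restriction).
    """
    if not -1.0 <= r_restricted <= 1.0:
        raise ValueError("r_restricted must lie between -1 and 1")
    if sd_ratio <= 0:
        raise ValueError("sd_ratio must be positive")
    numerator = r_restricted * sd_ratio
    denominator = math.sqrt(1.0 + r_restricted**2 * (sd_ratio**2 - 1.0))
    return numerator / denominator


# Hypothetical inputs, not taken from any of the cited studies.
observed_r = 0.30
applicant_to_incumbent_sd_ratio = 1.5

corrected_r = correct_direct_range_restriction(
    observed_r, applicant_to_incumbent_sd_ratio
)
print(f"observed r = {observed_r:.2f}, corrected r = {corrected_r:.3f}")
```

With these illustrative inputs the corrected coefficient is larger than the observed one, which is the direction of the downward bias described above.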

Besides these legal aspects, there are other benefits to the organisation that fairness in the selection process can bring about. Research into the perception of fairness in the US Military’s selection process showed that applicants who experienced the process to be fair were more likely to accept the job offer than those who did not (Harold et al., 2016).

Based on corrections for range restriction conducted by Schmidt et al. (2008), Schmidt et al. (2016) estimate that proper measures of cognitive ability should produce a 65% gain in job performance if a perfectly accurate selection system is used.

Based on this finding, Schmidt et al. (2016) argue that properly developed measures of cognitive ability should play a central role in selection processes due to their predictive validity.

Other reasons for its utility include (Schmidt et al., 2016):

- The relatively low costs involved in administering these tests.

- The theoretical foundation of cognitive ability is solid. The construct measured by cognitive ability is much clearer than the constructs measured by, for example, assessment centres and situational judgement tests.

Given the value that cognitive ability adds to the selection process, the use of these tools is likely to continue to grow (cut-e, 2016; SHL, 2018).

That said, psychological and human resource professionals must have a good knowledge of what is meant by general, broad, and specific mental ability and be able to make informed choices between specific measures of cognitive ability depending on the job requirements.

This white paper will attempt to provide a brief classification system for cognitive ability and its implications for the selection process. Other considerations when assessing cognitive ability will also be outlined.

A CLASSIFICATION OF COGNITIVE ABILITY

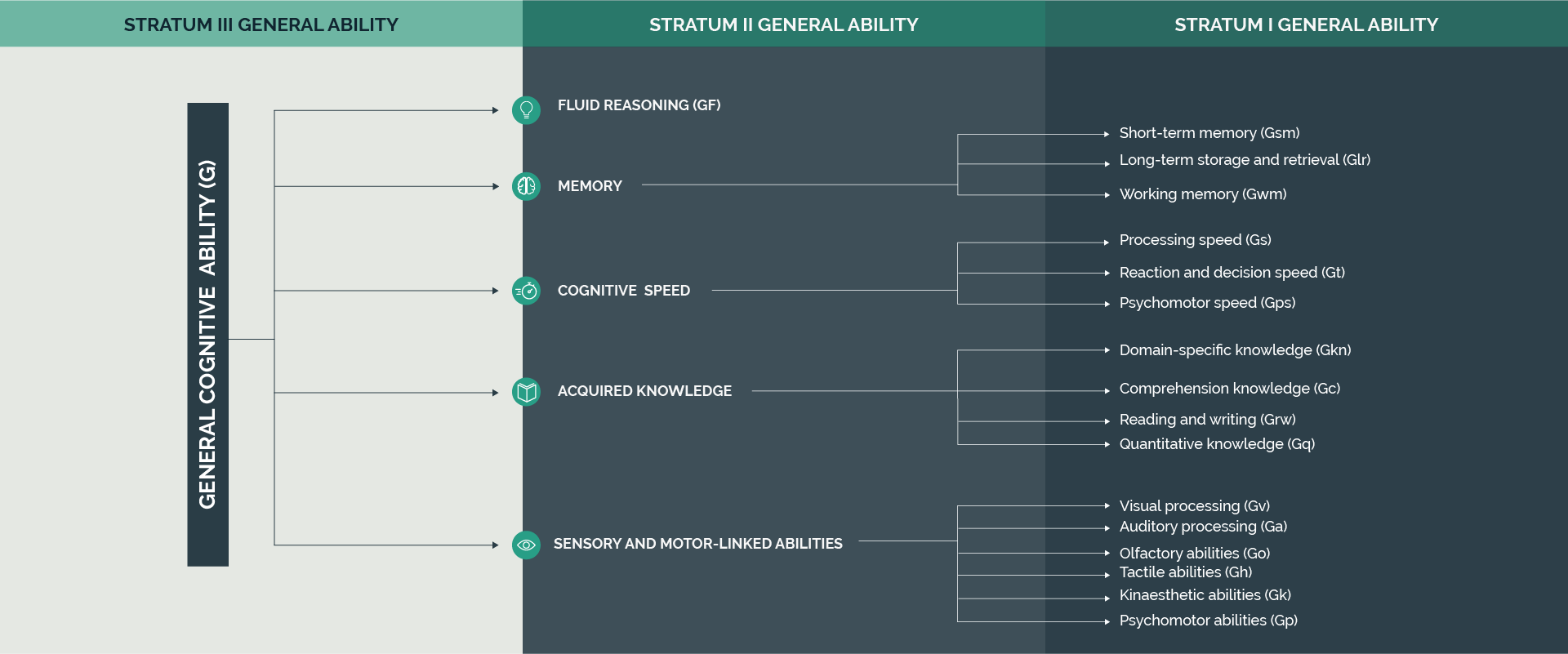

Exceptional work has been done by Carroll (1993) to understand the hierarchical structure of cognitive ability by analysing over 460 cognitive ability correlation matrices (Ones et al., 2012). Carroll's (1993) three-stratum theory and Horn and Cattell's (1966) theory of fluid and crystallised intelligence have become seminal works that have greatly advanced our understanding of general and specific cognitive abilities.

The integrated work of Horn and Cattell (1966) and Carroll (1993), often referred to as the Cattell-Horn-Carroll (CHC) model of intelligence, has become one of the most useful theoretical and empirical ways to think about the scientific structure of cognitive ability (McGrew, 1997; Schneider & McGrew, 2012). Schneider and McGrew (2012), as depicted in Figure 1, proposed a conceptual outline of general, broad, and specific abilities, which will be used as a guide throughout the white paper.

General cognitive ability is situated above all the specific cognitive abilities in Figure 1 (Schneider & McGrew, 2012). The sizeable correlations between specific abilities indicate that they have something in common, namely a general factor of cognitive ability, also known as general mental ability (Carroll, 1993; Keith & Reynolds, 2012; Taub & McGrew, 2004).

The general factor of cognitive ability is considered to be a good predictor of performance across different occupations (Ones et al., 2012; Schmidt et al., 2016). There is evidence that suggests that specific abilities do not provide incremental validity over general cognitive ability when predicting job and training performance (Brown, Le, & Schmidt, 2006; James & Carretta, 2002).

RECOMMENDATIONS

Depending on the context, we recommend that a battery of specific cognitive ability assessments is used, from which a general factor of cognitive ability can be extracted. If this is not possible, fluid intelligence (Gf) is a very strong representation of general cognitive ability (Keith & Reynolds, 2012; Ones et al., 2012) and could be administered as a standalone assessment.
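As an illustration of what extracting a general factor from a battery can look like in practice, the sketch below uses the first principal component of the standardised test scores as a rough stand-in for a general factor. This is a simplification of a proper factor analysis and is not a method prescribed by the sources cited here. The function name, the simulated data, and the assumed loading of 0.7 are all hypothetical.

```python
import numpy as np


def general_factor_scores(scores: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (loadings, standardised scores) on the first principal component.

    scores: array of shape (n_candidates, n_tests), one column per test.
    """
    # Standardise each test so that no single test dominates because of its scale.
    z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    # eigh returns eigenvalues in ascending order, so the last one is the largest.
    eigenvalues, eigenvectors = np.linalg.eigh(corr)
    first = eigenvectors[:, -1]
    if first.sum() < 0:  # eigenvector sign is arbitrary; orient it positively
        first = -first
    loadings = first * np.sqrt(eigenvalues[-1])
    component = z @ first
    component = (component - component.mean()) / component.std(ddof=1)
    return loadings, component


# Simulated battery: three hypothetical tests that each load 0.7 on a latent ability.
rng = np.random.default_rng(0)
latent_ability = rng.normal(size=500)
unique_parts = rng.normal(size=(500, 3))
battery = 0.7 * latent_ability[:, None] + np.sqrt(1 - 0.7**2) * unique_parts

loadings, g_scores = general_factor_scores(battery)
print("loadings on first component:", np.round(loadings, 2))
print("correlation with simulated latent ability:",
      round(float(np.corrcoef(g_scores, latent_ability)[0, 1]), 2))
```

In applied work a dedicated factor-analytic model, fitted on an adequately large and representative sample, would normally be preferred over this shortcut.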

An assessment of fluid intelligence might be particularly useful when applicants’ acquired ability, such as English reading ability, is limited (De Bruin et al., 2005). It is often not feasible to administer a battery consisting of every specific ability in the CHC model and it might be advisable to select a battery of two or three specific cognitive abilities that are related to the characteristics of an occupation.

In the following sections, each of the broad and specific abilities will receive special attention. Peterson et al. (2001) indicate that the occupational information network (O*NET OnLine, https://www.onetonline.org/) provides a useful framework to link cognitive abilities to the work characteristics of specific occupations. Pässler, Hell, and Beinicke's (2015) meta-analytical findings on the correlations between career interest and cognitive abilities provide further insights into the way abilities could tilt individuals towards specific occupations.

For this discussion, O*NET OnLine and findings based on the meta correlations between cognitive ability and career interest will be used to provide a general guideline on selecting specific abilities depending on the characteristics of the job.

FLUID INTELLIGENCE (GF)

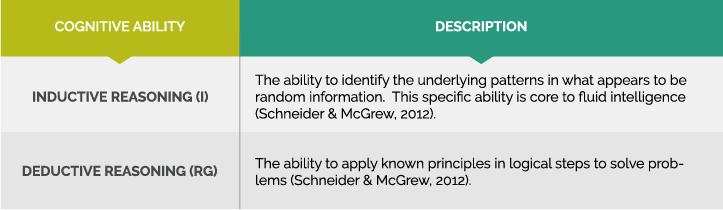

Fluid intelligence refers to the ability to solve unfamiliar and complex problems that cannot be performed by relying on what was previously learned (McGrew, 2009; Schneider & McGrew, 2012). Abilities that are closely associated with fluid intelligence are discussed in Table 1.

Specific cognitive abilities associated with fluid intelligence (Gf)

Given that fluid intelligence is such a strong representation of the general factor of intelligence (Ones et al., 2012), this construct might be predictive of job performance across occupations. From a career interest point of view, it would appear that a high fluid intelligence might tilt a person towards occupations in the investigative and realistic fields (Pässler et al., 2015).

Examples of occupations that may utilise fluid intelligence as a specific cognitive skill (National Center for O*NET Development, 2020):

neuropsychologists

paediatricians

judges

police detectives

epidemiologists

geneticists

air traffic controllers

nuclear medicine physicians

statisticians

veterinarians

Insight 1:

There is a strong relationship between fluid intelligence and multitasking (König, Bühner, & Mürling, 2005), which has predictive implications for occupations like bus drivers, firefighters, and gaming dealers (Fleishman et al., 1999).

MEMORY

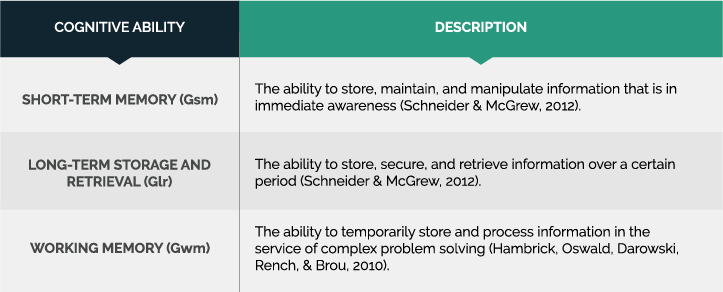

Memory, broadly, refers to the ability to store and retrieve information (McGrew, 2009; Schneider & McGrew, 2012). Memory is broken down into three separate dimensions that are outlined in Table 2.

Specific cognitive abilities associated with memory

Examples of occupations that may utilise memory as a specific cognitive skill (National Center for O*NET Development, 2020):

actors

clergy

singers

physicists

nurse anaesthetists

bioinformatics scientists

choreographers

sales representatives

chief executives

radio announcers

Insight 2:

There is a strong correlation between working memory and fluid intelligence (Chuderski, 2015; Hambrick et al., 2010; Kane et al., 2004). Given that fluid intelligence is such a strong representation of the general factor of intelligence, it is possible that working memory could, by proxy, also be predictive of job performance across occupations (Ones et al., 2012). Similar to fluid intelligence, working memory is predictive of multitasking (Hambrick et al., 2010).

COGNITIVE SPEED

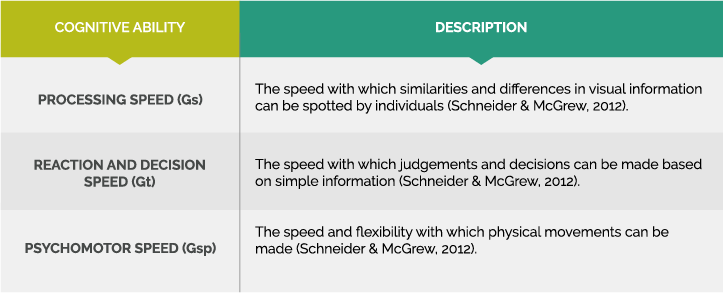

Cognitive speed refers to the ability to effectively and efficiently perform straightforward and repetitive mental tasks (McGrew, 2009; Schneider & McGrew, 2012). Cognitive speed is broken down into three separate dimensions outlined in Table 3.

Specific cognitive abilities associated with cognitive speed

Cognitive speed, especially processing speed, might slightly tilt individuals to more conventional careers where the frequent checking of information might be required (Pässler et al., 2015).

Examples of occupations that may utilise cognitive speed as a specific cognitive skill (National Center for O*NET Development, 2020):

AIR TRAFFIC CONTROLLERS

AIRLINE PILOTS

NUCLEAR TECHNICIANS

SAILORS

FIRE INSPECTORS

DESKTOP PUBLISHERS

CRITICAL CARE NURSES

FILE CLERKS

LIBRARY ASSISTANTS

FORENSIC TECHNICIANS

Insight 3:

A study conducted with warehouse workers (low complexity jobs) found that perceptual speed explained incremental variance on top of general mental ability in predicting task performance (Mount et al., 2008). The finding might have predictive implications for other low complexity occupations as well.

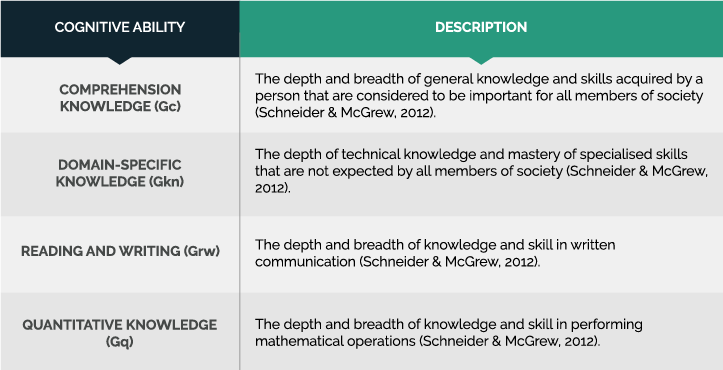

ACQUIRED KNOWLEDGE

Acquired knowledge can be defined as the ability to solve problems based on prior knowledge obtained through formal or informal education (McGrew, 2009; Schneider & McGrew, 2012). Acquired knowledge comprises four specific abilities, which are described in Table 4.

Specific cognitive abilities associated with acquired knowledge

Given the frequent use of verbal and numerical assessments in practice, both will receive special attention in this discussion. Verbal abilities might tilt individuals towards investigative and artistic occupations (Pässler et al., 2015).

Examples of occupations that may utilise verbal ability as a specific cognitive skill (National Center for O*NET Development, 2020):

EDITORS

CREATIVE WRITERS

ENGLISH TEACHERS

HISTORY TEACHERS

CLINICAL PSYCHOLOGISTS

ANTHROPOLOGISTS

POLITICAL SCIENTISTS

LAWYERS

EQUAL OPPORTUNITY OFFICERS

COPYWRITERS

A high numerical ability might tilt a person towards realistic and investigative occupations (Pässler et al., 2015). By contrast, a low numerical ability might tilt individuals toward artistic and social occupations (Pässler et al., 2015).

Numerical ability might be important for, but is by no means limited to, the ten occupations listed below (National Center for O*NET Development, 2020):

MATHEMATICIANS

STATISTICIANS

PHYSICISTS

ACTUARIES

FINANCIAL ANALYSTS

QUANTITY SURVEYORS

ECONOMISTS

ACCOUNTANTS

CHEMICAL ENGINEERS

CIVIL ENGINEERS

Insight 4:

According to Ones et al. (2012), acquired abilities, such as verbal and numerical ability, are some of the most reliable specific abilities that can be measured, which might be the reason for their frequent use in selection. That said, in a study conducted by Lubinski (2004), general mental ability accounted for about 50% of the common variance in a specific cognitive ability test, whereas verbal, quantitative, and spatial abilities accounted for only 10% of the remaining variance. Consequently, when administering a battery comprising verbal, numerical, and another assessment, it might still be meaningful to extract a general score.

Insight 5:

It is worth mentioning that mechanical knowledge, which refers to the ability to operate machines, tools, and equipment, can be classified under Domain-Specific Knowledge (Gkn) based on the CHC model (Schneider & McGrew, 2012). It is mentioned separately in this section because it is reported to have the highest correlation with realistic interests (Pässler et al., 2015). Consequently, this might be an important dimension to consider in the selection of persons for occupations like machine operators, farmers, and construction workers (National Center for O*NET Development, 2020).

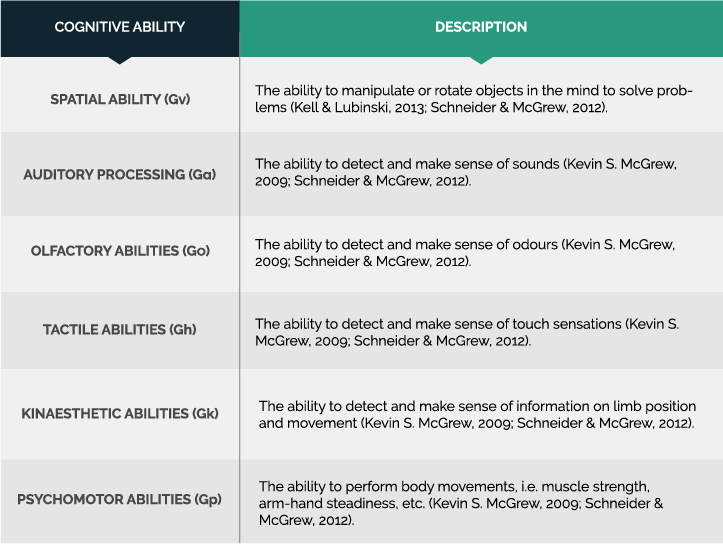

SENSORY AND MOTOR-LINKED ABILITIES

Sensory and motor-linked abilities refer to the accurate detection of body movements in reaction to sensory information (McGrew, 2009; Schneider & McGrew, 2012). Sensory and motor-linked abilities can be broken down into six specific abilities, which are described in Table 5.

Specific cognitive abilities associated with sensory and motor-linked abilities

Eliot and Czarnolewski (2007) indicate that, because of the pervasive use of spatial reasoning in people’s daily lives, the ability is often taken for granted. That is, until dangerous spatial errors are made, such as misjudging the distance of another car (Eliot & Czarnolewski, 2007). Kell and Lubinski (2013) indicate that, although spatial reasoning has been established as an important predictor in talent identification over thousands of studies, it is often neglected in favour of other specific abilities, such as verbal and numerical reasoning.

Of the sensory and motor-linked abilities, spatial reasoning will receive special attention in this section. Spatial reasoning has the strongest meta-analytical correlation with realistic and investigative career interests of all specific abilities investigated by Pässler et al. (2015).

Examples of occupations that may utilise spatial reasoning as a specific cognitive skill (National Center for O*NET Development, 2020):

ARCHITECTS

SCULPTORS

ENGINEERS

FLORAL DESIGNERS

PILOTS

TRUCK DRIVERS

LAND SURVEYORS

PARKING LOT ATTENDANTS

INTERIOR DESIGNERS

HUNTERS

Insight 6:

In accordance with the finding that spatial reasoning might tilt a person towards realistic and investigative career preferences (Pässler et al., 2015), spatial reasoning has consistently been shown to be predictive of job and training performance in the STEM (science, technology, engineering, and mathematics) fields (Kell & Lubinski, 2013; Wai et al., 2009). Spatial reasoning also appears to play a unique and important role in technical innovation (Kell et al., 2013).

Consequently, if this variable is not included in selection processes for the STEM fields, employers might be focussing on the wrong information to select potential talent (Kell & Lubinski, 2013; Wai et al., 2009). Based on a meta-analytical study conducted by Reilly and Neumann (2013), masculine gender roles are reported to contribute to the development of spatial reasoning abilities. The predictive implications of differences in gender roles should be considered in selection processes for the STEM fields.

CONSIDERATIONS WHEN ASSESSING COGNITIVE ABILITY


It is commonly known that structured interviews have higher incremental validity than unstructured interviews over cognitive ability in predicting job performance (Schmidt et al., 2016). However, what might be less well known is that the higher incremental validity reported for structured interviews could be attributed to the higher correlation between unstructured interviews and cognitive ability (Schmidt et al., 2016).
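The link between predictor overlap and incremental validity can be illustrated with the standard formula for the multiple correlation of two predictors. The sketch below holds the validity of each predictor constant and varies only the correlation between the interview and cognitive ability. All values are hypothetical and are not the estimates reported by Schmidt et al. (2016); the function name is likewise an assumption made for this example.

```python
import math


def incremental_validity(r_cognitive: float, r_interview: float,
                         r_between: float) -> float:
    """Gain in multiple R from adding an interview to a cognitive ability test.

    r_cognitive: validity of the cognitive ability test.
    r_interview: validity of the interview.
    r_between: correlation between interview ratings and cognitive ability.
    """
    r_squared = (
        r_cognitive**2 + r_interview**2
        - 2 * r_cognitive * r_interview * r_between
    ) / (1 - r_between**2)
    return math.sqrt(r_squared) - r_cognitive


# Hypothetical values: both interviews have the same validity on their own,
# but they overlap with cognitive ability to different degrees.
gain_low_overlap = incremental_validity(0.50, 0.40, r_between=0.20)
gain_high_overlap = incremental_validity(0.50, 0.40, r_between=0.50)

print(f"gain with low overlap:  {gain_low_overlap:.3f}")   # about 0.086
print(f"gain with high overlap: {gain_high_overlap:.3f}")  # about 0.029
```

In this illustration the interview that overlaps more with cognitive ability adds less on top of the test, even though both interviews are equally valid when considered alone.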

Some explanations that have been forwarded include that structured interviews, relative to unstructured interviews, more accurately capture job knowledge and experience, and as a result could explain unique variance on top of cognitive ability tests - not just on-the-spot reasoning based on unstructured questions (Cortina et al., 2000).

It is also worth mentioning that applicants are more likely to display overconfidence in unstructured interviews, which could negatively affect or bias selection decisions (Kausel et al., 2016).

RECOMMENDATIONS

If your organisation is considering using interviews along with psychometric assessments, it might be useful to have a human resource specialist apply the critical incident technique. With the critical incident technique, subject matter experts help to identify (1) circumstances where employees had to demonstrate a specific behaviour, (2) what employees did in the circumstances, and (3) what the outcome was (Klehe & Latham, 2006).

The information obtained from critical incident techniques can be used to develop a dilemma and the subject matter expert could be asked to help develop a scoring guide where different ratings could be assigned for different behaviours.

Structured situational interviews, where applicants may be asked to respond to a specific dilemma, can then be used to capture applicants’ job-specific knowledge and skills (Banki & Latham, 2010; Christina & Latham, 2004; Latham & Sue-Chan, 1999).

DIFFERENCE BETWEEN LEADER AND FOLLOWER INTELLIGENCE

There is sufficient meta-analytical evidence to suggest that cognitive ability predicts leadership effectiveness (Bono & Judge, 2004). However, this relationship might not always be that simple. Antonakis et al. (2017) report that “super smart” leaders could have a too-much-of-a-good-thing effect on effectiveness for three reasons.

Firstly, too large a gap between the cognitive abilities of leaders and their followers could result in followers perceiving their leader to be intellectually superior (Simonton, 1985).

Secondly, too large a gap between the intelligence of leaders and followers might reduce followers’ ability to comprehend what leaders are saying and, as a result, negatively influence leadership effectiveness (Simonton, 1985).

Thirdly, leaders who have far greater cognitive abilities than their followers are more likely to be perceived as overly critical because of their ability to spot more errors in the reasoning of their followers (Simonton, 1985).

Followers’ negative perceptions of “super intelligent” leaders might weaken the transformational effect that the leaders could have in organisations, especially if the gap between the mean cognitive ability of the team and the leader exceeds a certain threshold.


COGNITIVE ABILITY AND ITS RELATIONSHIP WITH SPECIFIC PERFORMANCE DIMENSIONS

Ones et al. (2012) found a stronger relationship between cognitive ability and in-role performance than between cognitive ability and overall performance. In-role performance (task performance) can be defined as tasks that are related to the technical core of the organisation (Borman & Motowidlo, 1997). Alonso et al. (2008) reported a small and negligible correlation between cognitive ability and extra-role performance (contextual performance).

Extra-role performance can be defined as those additional helpful behaviours, not necessarily expected by the job description, that employees engage in to create a social climate for team performance (Borman & Motowidlo, 1997). It would appear that personality dimensions, such as conscientiousness and agreeableness, are better predictors of extra-role performance than cognitive ability (Ones et al., 2012). Cognitive ability appears to have a moderate and meaningful negative correlation with counterproductive performance (Ones et al., 2012).

Counterproductive performance can be defined as wilful acts aimed at harming organisations or individual members associated with it (Spector et al., 2006). Individuals with higher cognitive abilities might be more likely to anticipate the negative consequences of rule-breaking behaviour and, as a result, might be more inclined to refrain from it or overcome negative situations by solving problems (Dilchert et al., 2007).

RECOMMENDATIONS

Job performance is a multifaceted construct comprising several dimensions (Campbell & Wiernik, 2015). Even though cognitive ability might be a good predictor of overall job performance, it does not provide a complete predictive picture of the multifaceted nature of job performance (Ones et al., 2012; Schmidt et al., 2016).

We recommend that a battery of assessments comprising cognitive ability, personality, emotional intelligence, and career interest is used when predicting job performance.

THE EXISTENCE OF COLLECTIVE INTELLIGENCE

Woolley et al. (2010) reported that collective intelligence, where the group performs a wide variety of cognitive ability tasks together, is a stronger predictor of group performance than the sum of individual cognitive abilities. These cognitive tasks could include brainstorming together, solving visual puzzles together, making collective judgements, and negotiating limited resources (Woolley et al., 2010).

This relationship also appears to be stronger when certain interactional elements are present, such as greater conversational turn-taking in the group, greater social sensitivity, and a higher proportion of women (Woolley et al., 2010). While this finding has received widespread attention in academia and the media, a meta-analysis conducted by Credé and Howardson (2017) indicated that, at this stage, there is limited support for the existence of a general factor of collective intelligence.

The construct might hold promise for a multilevel way to look at cognitive ability (Woolley et al., 2010) but, for now, more programmatic research is required before important practical decisions can be based on the concept of collective intelligence.

RECOMMENDATIONS

If the aim is to predict team effectiveness, it might be worth looking at other predictors of team effectiveness rather than collective intelligence, such as team conflict, efficacy, empowerment, climate, shared mental models, mean personality, goal clarity, goal orientation, diversity, network features, and leadership (Credé & Howardson, 2017; Mathieu et al., 2008).

LIMITATIONS

Inescapable controversies surround the use of cognitive ability tests for selection decisions. It would be naïve not to mention these controversies in this white paper.

DIFFERENCES IN COGNITIVE ABILITY TEST SCORES BETWEEN ETHNIC GROUPS

A first major controversy regarding general mental ability includes an acknowledgement of subgroup differences that exist between people of different ethnic origins on dimensions measured by cognitive ability tests (Ones et al., 2012; Sackett et al., 2008; Viswesvaran & Ones, 2002).

However, it is worth mentioning that there is a lot more within-group (six standard deviations) than between-group variability (one standard deviation), which strongly suggests that there are exceptionally intelligent individuals in all ethnic groups (Roth et al., 2001).

There is a large body of evidence that suggests that differences in cognitive ability can, in part, be attributed to environmental factors such as educational quality or post-natal conditions that are often associated with socio-economic status (Rindermann & Thompson, 2016; Sauce & Matzel, 2018; Trahan et al., 2014).

When cognitive ability tests are used for selection purposes, the risk of adverse impact on individuals from previously disadvantaged groups is much higher (Outtz, 2002). This risk is pronounced in countries like South Africa where differences in socio-economic status can be attributed to the legacy of apartheid (Van Eeden & De Beer, 2018).
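One common way to quantify adverse impact is to compare the selection rates of two groups. The sketch below computes such an adverse impact ratio and flags it against the four-fifths (0.80) rule of thumb, which originates from United States selection guidelines. That threshold is not drawn from the sources cited in this white paper and may not be the applicable standard in other jurisdictions, so it is an assumption of this example, as are the function names and the applicant counts.

```python
def selection_rate(selected: int, applicants: int) -> float:
    """Proportion of applicants in a group who were selected."""
    if applicants <= 0:
        raise ValueError("applicants must be positive")
    if not 0 <= selected <= applicants:
        raise ValueError("selected must lie between 0 and applicants")
    return selected / applicants


def adverse_impact_ratio(focal_selected: int, focal_applicants: int,
                         reference_selected: int, reference_applicants: int) -> float:
    """Selection rate of the focal group divided by that of the reference group."""
    reference_rate = selection_rate(reference_selected, reference_applicants)
    if reference_rate == 0:
        raise ValueError("reference group selection rate is zero")
    return selection_rate(focal_selected, focal_applicants) / reference_rate


# Hypothetical applicant counts, for illustration only.
ratio = adverse_impact_ratio(
    focal_selected=12, focal_applicants=60,
    reference_selected=30, reference_applicants=100,
)
FOUR_FIFTHS_THRESHOLD = 0.80  # rule-of-thumb threshold assumed for this example
flagged = ratio < FOUR_FIFTHS_THRESHOLD

print(f"adverse impact ratio = {ratio:.2f}, flagged = {flagged}")
```

A ratio below the chosen threshold is a prompt for closer review of the selection procedure rather than proof of unfairness, particularly when applicant numbers are small.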

RECOMMENDATIONS

To reduce the adverse impact of cognitive ability, it is important to include other predictors that have been shown to display less adverse impact between ethnic groups, such as personality assessments or structured interviews (Outtz, 2002). Careful consideration could also be given to the construction of norms for cognitive ability tests across cultural groups to control for the adverse impact that cognitive ability scores could have (Roodt & De Kock, 2018; Van Eeden & De Beer, 2018).

Psychologists should further do everything in their power to ensure that no stereotypes about different ethnic groups are reinforced during test administration or the reporting of results for decision-making (Aguinis et al., 2016).

CROSS-CULTURAL EQUIVALENCE IN COGNITIVE ABILITY TESTS

A second limitation is the historically Western origin of cognitive ability tests (Schaap, 2011; Van De Vijver, 1997; Van Eeden & De Beer, 2018). More specifically, different cultural groups’ familiarity with the content used in cognitive ability tests, for example, whether the test is administered to first or second language English speakers, might influence scores on the tests (Malda et al., 2010). This bias could result in an adverse impact against individuals of non-Western descent when the tests are used for selection decisions (Schaap, 2011).

RECOMMENDATIONS

It is essential that equivalence in the measurement of cognitive ability is demonstrated before tests are used for selection decisions in non-Western populations, especially if the tests were developed in Western contexts (Schaap, 2011). Three types of equivalence of measures should be investigated, namely construct, method, and item equivalence (Van De Vijver, 2002; Van Eeden & De Beer, 2018).

Construct equivalence refers to the uniformity with which the psychological constructs are measured across different cultural groups (Van De Vijver, 2002; Van Eeden & De Beer, 2018). Method equivalence refers to the investigation of practical application issues, such as the language in which instructions for a cognitive test are provided, that could lead to differences in scores between cultural groups (Van De Vijver, 2002; Van Eeden & De Beer, 2018).

Item equivalence is ensured when it can be shown that cultural groups do not react differently to items due to differences in understanding their meaning (Van De Vijver, 2002; Van Eeden & De Beer, 2018).

DIFFERENCES IN COGNITIVE ABILITY TEST SCORES BETWEEN BINARY GENDERS

A third major controversy regarding mental ability includes an acknowledgement of differences between gender roles on specific cognitive abilities. Men are tilted towards and perform better in tests of numerical reasoning, mechanical reasoning, and spatial reasoning (Brunner et al., 2008; Lemos et al., 2013; Reilly & Neumann, 2013; Wai et al., 2018).

Numerical, mechanical, and spatial reasoning are important predictors of performance in the STEM fields (Brunner et al., 2008; Ganley & Vasilyeva, 2011; Lemos et al., 2013; Wai et al., 2009, 2018) but, if they are the sole basis for selecting individuals for STEM fields, their use could lead to adverse impact against women.

RECOMMENDATIONS

Similar to the recommendations to reduce adverse impact based on ethnic group differences in cognitive ability (Outtz, 2002), other assessments, such as career interest, should also be included to identify women who are highly interested in and, consequently, motivated to perform in the STEM fields (Nye et al., 2018).

Motivation provides incremental validity over cognitive ability in predicting job performance (Van Iddekinge et al., 2018). Finally, professionals must keep their bias in check when assessing and reporting the results of candidates, by not endorsing gender stereotypes (Aguinis et al., 2018).

Conclusion

Cognitive ability is a well-defined construct, in most cases cost-effective, and highly predictive of job and training performance.

A wide variety of cognitive ability tests exists and, given an effective classification system, practitioners might be more empowered to select batteries of cognitive assessments that speak directly to specific occupational needs.

That said, it remains meaningful to extract a general factor from a series of specific cognitive ability assessments, which is more likely to be predictive of job and training performance.

Finally, there is an artful science behind the use of cognitive ability for the prediction of job and training performance and practitioners might greatly benefit from further readings to craft predictive algorithms for occupations.

References

Aguinis, H., Culpepper, S. A., & Pierce, C. A. (2016). Differential prediction generalization in college admissions testing. Journal of Educational Psychology, 108(7), 1045–1059. https://doi.org/10.1037/edu0000104

Aguinis, H., Ji, Y. H., & Joo, H. (2018). Gender productivity gap among star performers in STEM and other scientific fields. Journal of Applied Psychology, 103(12), 1283–1306. https://doi.org/10.1037/apl0000331

Alonso, A., Viswesvaran, C., & Sanchez, J. I. (2008). The mediating effects of task and contextual performance. In J. Deller (Ed.), Research contributions to personality at work. Rainer Hampp.

Antonakis, J., House, R. J., & Simonton, D. K. (2017). Can super smart leaders suffer from too much of a good thing? The curvilinear effect of intelligence on perceived leadership behavior. Journal of Applied Psychology, 102(7), 1003–1021. https://doi.org/10.1037/apl0000221